Building trust in healthcare AI: five key insights from the 2025 Future Health Index

May 16, 2025 | 3 minute read

AI is already helping healthcare providers tackle their most pressing challenges, but its full potential remains untapped. The key to wider adoption? Trust. The 2025 Future Health Index – the largest global survey of its kind – asked patients and healthcare professionals how they view the potential of AI and how comfortable they are with its use. Here’s what we found – and why we believe trust and innovation must go hand in hand to unlock AI’s promise in healthcare.

Automating administrative tasks. Expanding care capacity. Reducing patient waiting times. According to our 2025 Future Health Index survey, these are among the top benefits healthcare professionals see in using AI in their departments. And they couldn’t be timelier. The need to transform healthcare has never been more urgent, with staff shortages, rising costs, and systemic inefficiencies straining healthcare systems around the globe.

Yet as AI’s role in society rapidly expands, so does public skepticism. Despite its benefits, people’s trust in AI is declining, with growing concerns over its safety, reliability and potential unintended consequences. Nowhere are these concerns more critical than in healthcare, where people’s health and well-being are at stake.

Our survey confirms this trust gap: while 79% of healthcare professionals are optimistic that AI could improve patient outcomes, only 59% of patients share that optimism. Most patients welcome the use of AI for administrative tasks such as making appointments or checking in. But as its use moves into clinical areas – and the stakes rise – their comfort drops. More than half of patients (52%) worry about losing the human touch in their care. Healthcare professionals, too, express concerns – particularly around data bias and liability risks.

In a sector built on human connection, trust in AI must be earned at every step. The question is: how? Our survey findings offer five important clues for building trust in healthcare AI.

1. Patients are open to AI – if it improves and humanizes care

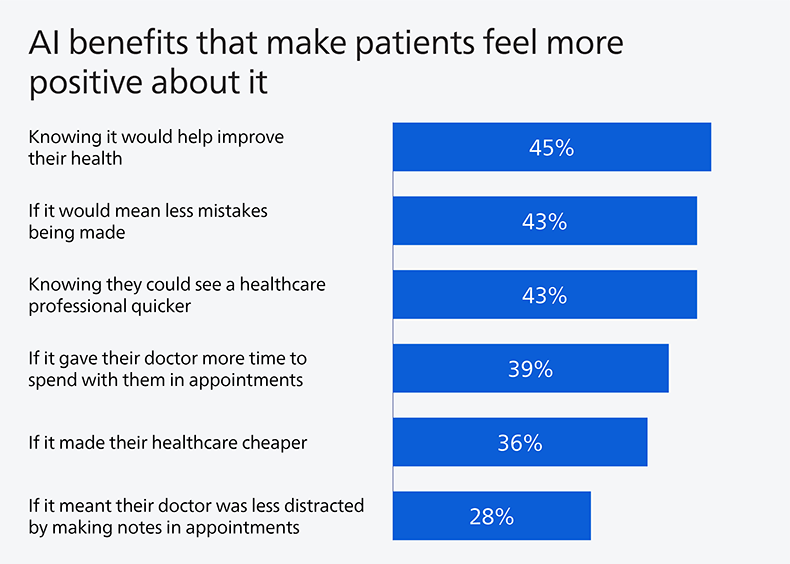

To understand what would foster patients’ trust in healthcare AI, we asked them directly. Their response was clear: they want AI to work safely and effectively, supporting better health outcomes and reducing errors. Patients are also more open to AI when it frees up doctors for personal interactions, easing their fears of a less human healthcare experience as technology becomes more prevalent. Used correctly, AI has the potential to make healthcare more personal, not less – and that’s exactly what patients are asking for.

2. As patient awareness of AI grows, so does their demand for reassurance

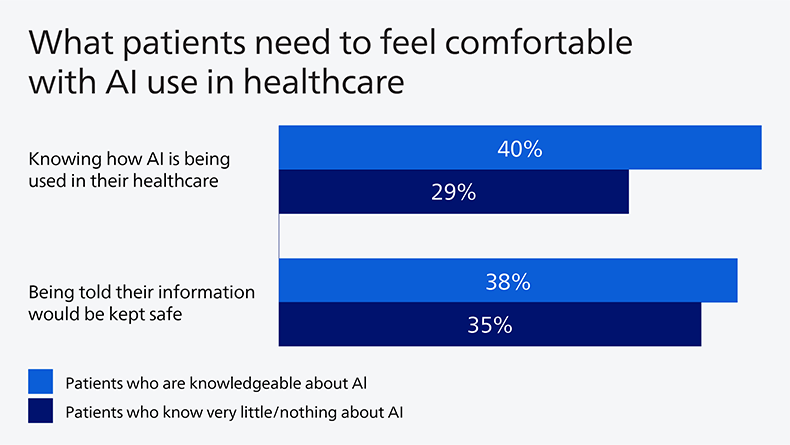

Unsurprisingly, patients in our survey who feel more knowledgeable about AI tend to be more comfortable with its use. However, these patients also seek stronger assurances – whether it’s about understanding how AI is used or about the safety of their data. Knowledgeable patients may be more aware of AI’s potential benefits, resulting in higher comfort levels, but they’re also more conscious of its risks and limitations, leading to a stronger demand for transparency and control.

3. Patients trust healthcare professionals more than any other source on AI

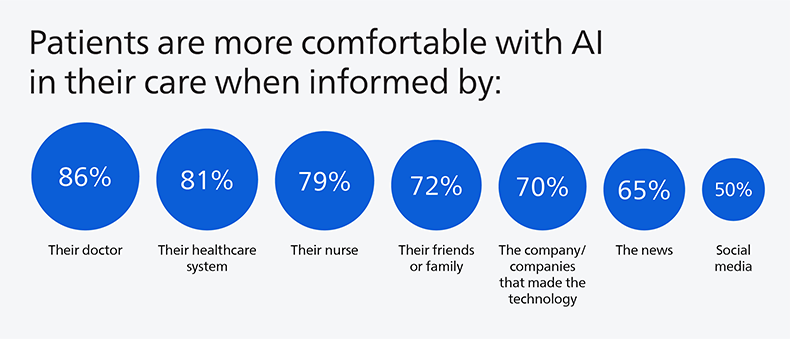

When it comes to healthcare AI, who do patients trust? Our findings show that patients, regardless of their level of knowledge about AI, prefer to receive information and reassurance from their doctors, healthcare systems, and nurses. This mirrors a 2024 Gallup poll in the US, which found that, despite an overall decline in public trust, healthcare professionals remain among the most trusted professions, with nurses holding the top spot. By leveraging their established relationships and credibility, healthcare professionals can guide patients through the integration of AI in their care, mitigating concerns and fostering comfort in the use of these technologies.

4. Technology must serve healthcare professionals, not burden them

Patients aren’t the only ones with concerns. While healthcare professionals are optimistic about AI’s potential, they question how useful new digital health technologies, including AI, are in everyday practice. Although 69% of healthcare professionals say they are involved in developing these technologies, only 38% feel those technologies are designed with their needs in mind. This highlights a significant gap in translating clinical needs into practical solutions that serve their intended purpose. Involving healthcare professionals from the beginning and throughout the development process is essential for building trust and acceptance. AI solutions should fit seamlessly into healthcare workflows and IT infrastructures, creating frictionless experiences for healthcare professionals rather than adding to existing burdens.

5. Healthcare professionals call for clear guardrails

Another key concern for healthcare professionals is accountability: who’s responsible if an AI system makes a diagnostic or treatment error? With issues like hallucinations in generative AI affecting accuracy, our survey suggests that uncertainty around legal responsibility remains a major barrier to adoption. Bias is another worry. Many healthcare professionals fear that AI could reinforce existing disparities in care due to biased data. To address these concerns, they’re calling for clarity on legal liability when using AI (38%), clear guidelines on AI’s use and limitations (38%), robust scientific evidence of its effectiveness (37%), and continuous monitoring to ensure it remains reliable over time (36%). Interestingly, reassurance about job security ranks lowest on their list (23%) – an encouraging sign that many now see AI as a complement to their expertise, not a threat to it.

The bottom line: Trust in healthcare AI can only be built in collaboration

The data in our report is clear: AI is no longer a nice-to-have – it’s a necessity to prevent healthcare systems from buckling under growing demand. But without trust from the people who rely on it for their care, the full potential of AI in healthcare cannot be realized.

That’s why taking a responsible and collaborative approach to AI innovation is more important than ever. AI should enhance, rather than erode, the trusted relationships between patients and healthcare professionals. It must deliver tangible benefits, be anchored in robust safeguards, and operate within clear, consistent regulatory frameworks. Only then can AI earn the trust it needs to drive meaningful transformation in healthcare.

That doesn’t mean slowing down AI innovation – it means accelerating it in the right direction, bringing life-saving AI solutions to more people, faster, while fostering trust. To achieve this, we must act together across disciplines, institutions and borders. Our report offers insights to guide that collaboration. We hope it sparks the conversations and action needed to bring about change across the healthcare sector.