By Henk van Houten

Executive Vice President, Chief Technology Officer, Royal Philips

Headlines featuring phrases like “AI beats doctors” or “AI beats radiologists” are capturing the public imagination, touting the impressive abilities of AI algorithms in potentially life-saving tasks such as detecting breast cancer or predicting heart disease. While such headlines reveal how much exciting progress has been made in AI, in a way they are also misleading – because they suggest a false dichotomy. It’s true that an AI algorithm must perform on par with, or even better than, a human expert on a specific task in order to be of value in healthcare. But it’s not actually a matter of one “beating” the other. A lot of the decisions that clinicians make on a daily basis are incredibly complex, and, as I will argue, require more than an AI- or data-driven approach alone. Both clinicians and AI have their unique strengths and limitations. It’s their joint intelligence that counts if we want to make a meaningful difference to patient care. Here’s why.

The promise of AI: an extra set of superhuman eyes

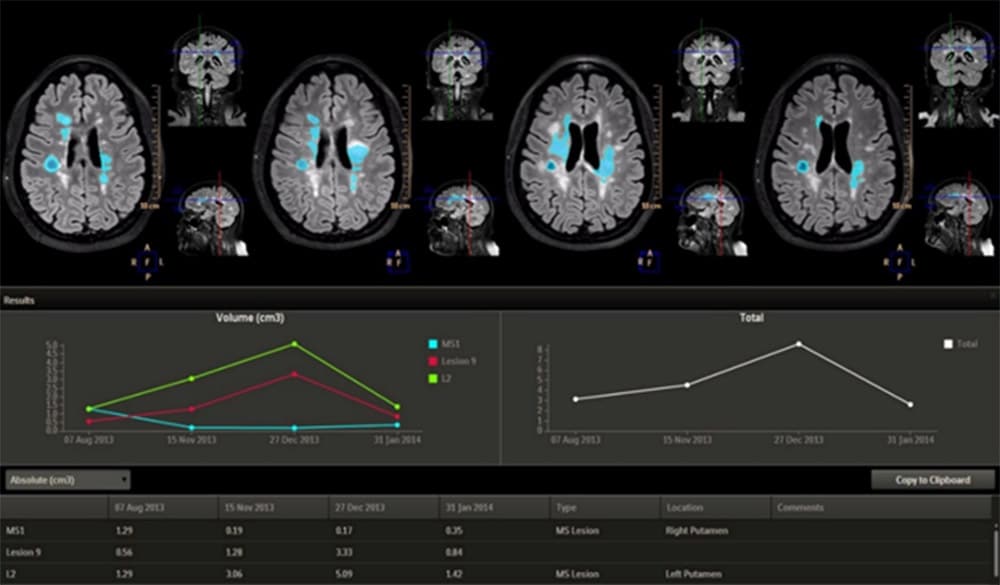

To unravel the complementary nature of AI and human expertise in healthcare, let’s start with a short primer on AI and its modern-day poster child: deep learning. What sometimes gets glossed over in popular discourse is that AI has already been with us for decades. Ironically, early AI systems strongly relied on expert knowledge, which was hard-coded into computer software. In recent years, however, a data-driven approach to AI has taken precedence, thanks to the explosion of digital data that has fueled the advent of deep learning – which is also the focus of this article. Deep learning algorithms learn to perform a task from vast amounts of annotated data, often with superior accuracy, without being explicitly programmed for it [1]. The main strength of deep learning is that it can detect, quantify, and classify even the subtlest of patterns in data – offering an extra set of “superhuman” eyes. This makes deep learning algorithms ideally suited to support pattern-centric medical disciplines such as radiology and pathology, which face ever-mounting workloads coupled with severe and growing staff shortages in many parts of the world [2]. The examples are as varied as they are promising. Deep learning algorithms can relieve time-pressured professionals of repetitive and laborious tasks such as segmenting lesions in the lungs in CT scans [3], tracking how brain tumors develop over time in MRI scans to help monitor disease progression [4], or grading the severity of prostate cancer based on tissue specimens [5].

AI can help track the volume of brain tumors over time by extracting information from MRI images.

This is where the benefits of AI clearly shine through. By automating lower-level tasks, AI can free up time for clinicians to focus on higher-level, value-adding tasks and patient interaction. But when it comes to diagnostic decision-making, the stakes go up – and the picture gets more complex.

Two pairs of eyes see more than one – the need for human oversight

We know that even the most experienced clinicians may overlook things. Missed or erroneous diagnoses still occur. In fact, a 2015 report by the National Academies of Sciences, Engineering, and Medicine concluded that most people will experience at least one diagnostic error in their lifetime [6]. The truth is, however, that AI algorithms will never be infallible either. They may err too, even if it’s only in 1 out of 100 cases. Clearly, that is a risk we need to mitigate when an algorithm is used to assess whether a patient is likely to have a malignant tumor. That’s why human oversight is essential, even when an algorithm is highly accurate. Applying two pairs of eyes to the same image – one pair human, one pair AI – can create a safety net, with clinician and AI compensating for what the other may overlook.
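To make the safety-net argument concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the algorithm and the clinician miss findings independently of each other, which real readers rarely do. The 1% algorithm miss rate echoes the "1 out of 100" example above; the 10% clinician miss rate is a hypothetical figure, not taken from any study.

```python
def combined_miss_rate(ai_miss_rate: float, clinician_miss_rate: float) -> float:
    """Probability that BOTH readers miss the same finding.

    Assumes the two readers err independently (a simplification):
    a finding slips through only if each reader misses it.
    """
    for rate in (ai_miss_rate, clinician_miss_rate):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("miss rates must lie between 0 and 1")
    return ai_miss_rate * clinician_miss_rate


# Illustrative numbers only: 1% for the algorithm, 10% (hypothetical) for the clinician.
ai_alone = 0.01
clinician_alone = 0.10
both = combined_miss_rate(ai_alone, clinician_alone)
print(f"AI alone: {ai_alone:.1%}, clinician alone: {clinician_alone:.1%}, both miss: {both:.2%}")
```

In practice the errors of the two readers are likely to be correlated (a subtle lesion is hard for both), so the real benefit will be smaller than this product suggests. The direction of the effect is what matters here.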


One recent study in Nature shows how such a human-AI partnership could work in the detection of breast cancer [7].

Breast cancer is the second leading cause of death from cancer in women. Fortunately, early detection and treatment can considerably improve outcomes. Interpretation of mammograms remains challenging, however, with human experts achieving varying levels of accuracy. Having two radiologists read the same mammogram has therefore long been an established practice in the UK and many European countries, resulting in superior performance compared to a single-reader paradigm. Yet such a double-reading paradigm has also been contested, with some arguing it takes up a prohibitive amount of – already scarce – resources. What if, instead of relying on two human experts, we were to replace one with AI, while still keeping the other expert in the loop for human oversight? According to the findings published in Nature, this would not lead to inferior performance compared to the traditional double-reading paradigm with two human readers. That means radiologist workload could be considerably reduced with the support of AI, while preserving expert judgment as part of the process to detect cancers the algorithm may have missed. It’s a compelling example of the power of human-AI partnership. And there are other collaborative models which also rest on the same four-eyes principle. AI can act either as a first reader, a second reader, or as a triage tool that helps prioritize worklists based on the suspiciousness of findings [8]. Yet to truly understand why we cannot rely on algorithms alone when it comes to clinical decision-making, we need to probe even deeper – and examine where they may fall short.
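The triage model mentioned above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular product works: the `Study` type, the `suspicion` score, and the example values are all hypothetical. The key point it shows is that triage reorders the worklist without removing anything from it, so every study still reaches a human reader.

```python
from dataclasses import dataclass


@dataclass
class Study:
    study_id: str
    suspicion: float  # hypothetical AI score in [0, 1]; higher means more suspicious


def prioritize_worklist(studies: list[Study]) -> list[Study]:
    """Return a new list with the most suspicious studies first.

    Nothing is dropped: the human reader still sees every study,
    only the reading order changes.
    """
    return sorted(studies, key=lambda s: s.suspicion, reverse=True)


# Made-up example worklist.
worklist = [
    Study("A", 0.12),
    Study("B", 0.91),
    Study("C", 0.47),
]

for study in prioritize_worklist(worklist):
    print(study.study_id, study.suspicion)
```

Sorting rather than filtering is a deliberate choice in this sketch: it keeps the four-eyes principle intact while letting the most urgent cases be read first.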

Deep learning is not deep understanding

As explained before, deep learning algorithms are able to uncover even the subtlest of patterns in data, unhindered by human limitations in perceptual acuity or the constraints of prior knowledge. That’s what makes them so valuable, allowing us to see more than we ever could with our own eyes. But there’s a flip side to that coin. As accurate as deep learning algorithms may be in performing a clearly defined task such as detecting breast tumors of a certain size or shape, they do not develop a true understanding of the world. They don’t have the ability to reason or abstract beyond the data they were trained on. They are only smart in a narrow sense. And that means we need to be careful in how we apply them.

Deep learning algorithms allow us to see more than we ever could with our own eyes. But unlike human experts, they do not develop a true understanding of the world.

A cautionary example from the AI literature is a study of visual recognition of traffic signs. An algorithm was trained to recognize STOP signs in a lab setting, and did so admirably well – until it encountered STOP signs that were partly covered with graffiti or stickers. Suddenly, the otherwise accurate algorithm could be tricked into misclassifying the image – mistaking it for a Speed Limit 45 sign in 67% of the cases when it was covered with graffiti, and even in 100% of the cases when it was covered with stickers [9].

A deep learning algorithm trained to recognize STOP signs can be tricked into misclassifying a STOP sign partly covered with graffiti or stickers as a Speed Limit 45 sign. Source: Eykholt, K., Evtimov, I., Fernandes, E., et al. (2018) [9].

You or I would never confuse a STOP sign with a speed limit sign. That’s because, as people, we have a robust conceptual understanding of both, based on defining features such as their shape and color. But the algorithm “saw” only patterns of pixels, making it falter in the face of deviations from the data that it was trained on. Of course, such deliberate attempts to fool an algorithm may not be of concern to medical applications as long as data are properly secured. What this example nevertheless highlights is how a pure reliance on pattern recognition can lead algorithms astray when they encounter unfamiliar data. Will an algorithm that performs well in one hospital be as accurate in another hospital? Will it generalize well from one population to the next? These are critical questions that require expert judgment and validation. Again it goes to show why AI and deep clinical knowledge need to go hand in hand – even before an algorithm is put to use in clinical practice.
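One simple way to start probing the generalization question is to report an algorithm's performance separately for each site rather than as a single pooled number. The Python sketch below does this for sensitivity and specificity. It is an illustration only: the function names, the site labels, and the toy data are invented for this example, and a real validation study would involve far more cases and expert-defined ground truth.

```python
from collections import defaultdict


def sensitivity_specificity(records):
    """Compute (sensitivity, specificity) from (truth, prediction) boolean pairs."""
    tp = fn = tn = fp = 0
    for truth, prediction in records:
        if truth and prediction:
            tp += 1
        elif truth and not prediction:
            fn += 1
        elif not truth and not prediction:
            tn += 1
        else:
            fp += 1
    # Return NaN when a site has no positive (or no negative) cases at all.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


def metrics_by_site(cases):
    """Group (site, truth, prediction) tuples by site and score each site separately."""
    grouped = defaultdict(list)
    for site, truth, prediction in cases:
        grouped[site].append((truth, prediction))
    return {site: sensitivity_specificity(records) for site, records in grouped.items()}


# Made-up toy data: (site, disease actually present, algorithm flagged it).
cases = [
    ("hospital_a", True, True), ("hospital_a", True, True),
    ("hospital_a", False, False), ("hospital_a", False, False),
    ("hospital_b", True, True), ("hospital_b", True, False),
    ("hospital_b", False, False), ("hospital_b", False, True),
]

for site, (sens, spec) in metrics_by_site(cases).items():
    print(f"{site}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

A gap between sites in a breakdown like this does not explain itself. Interpreting it (different scanners, different patient populations, different labeling practices) is exactly where clinical and domain expertise come in.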


With appropriate human oversight and a proper understanding of an algorithm’s strengths and limitations, it can be used in a way that is effective, safe, and fair. But there’s one more caveat.

Medicine is more than pattern recognition

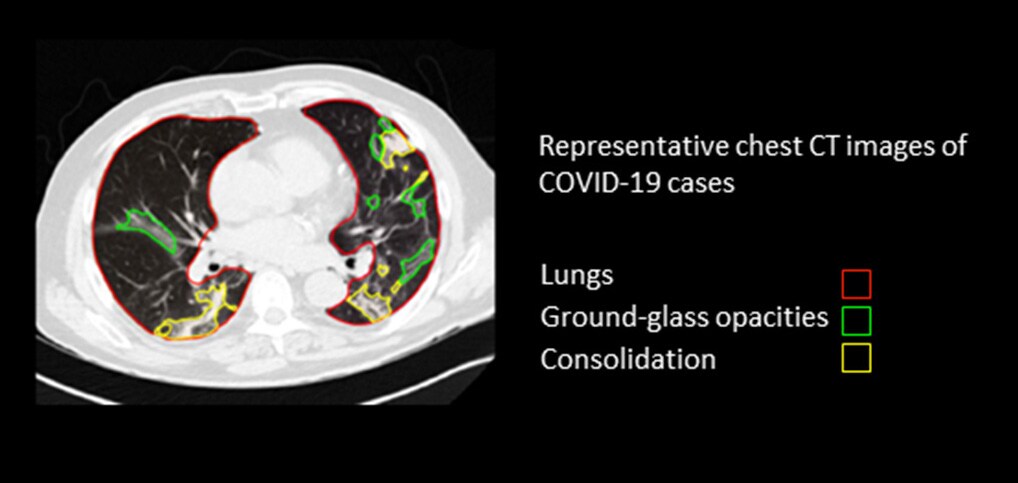

We’ve seen how pattern recognition lends itself well to algorithmic optimization, provided the right guardrails are in place. Clinicians, however, have a much wider task that goes beyond mere pattern recognition. Take a CT scan of the lungs as an example. Algorithms can help detect and segment areas of the lungs that show increased density, such as so-called ground glass opacities or consolidations. Yet these anomalies may point to a wide range of medical conditions. They could be the result of a viral infection (possibly but not necessarily COVID-19), a non-infectious disease, or a pulmonary contusion – to name just a few possibilities. To find out what ails the patient, the radiologist or referring clinician will assess other (non-imaging) types of data as well, and connect them to the patient’s history, current symptoms, and possible co-morbidities.

Ground glass opacities and consolidations in a CT scan of the lungs are a non-specific finding that can point to multiple conditions (including but not limited to COVID-19), leaving it to the clinician to draw conclusions from other types of information as well.

It’s this sort of holistic interpretation where the contextual understanding of the clinician truly comes into play. Deciding on diagnosis and treatment requires a sensitivity to the patient as a unique human being; the ability to look beyond patterns of pixels and see the full person in context. It’s a process that is notoriously difficult to emulate algorithmically.


Deep learning relies on large, structured data sets. But when it comes to a complex medical diagnosis that involves many different factors, the number of patients for whom the complete set of data is available is often too limited to build a sufficiently reliable predictive algorithm [10]. Of course, as technology evolves and we manage to successfully address some of the data challenges in healthcare, AI’s ability to make meaningful predictions from complex and multimodal data will continue to grow. Yet still, there may not always be prior examples for deep learning algorithms to build on. A patient may present with a unique combination of symptoms. A new drug may have unforeseen side effects. Such novel variations occur all the time in clinical practice, defying what can be algorithmically derived from historical data. Or as AI pioneer Gary Marcus puts it: “in a truly open-ended world, there will never be enough data” [1].

This raises even more fundamental questions about the complementary nature of AI and human expertise – and the future course of AI innovation we set for ourselves. In our excitement about the possibilities of deep learning, have we become too one-sidedly focused on big data as the fuel of all AI innovation? What about the rich body of clinical and scientific knowledge that we have already acquired over the ages? Can we find new and creative ways to bring together the best of both worlds? These are questions I will explore in more detail in a future article, and I very much welcome your thoughts as well – because I truly believe there is never one single approach that renders all others useless or obsolete. Medicine is complex. Intelligence is multifaceted. Only by acknowledging this complexity can we advance healthcare in a meaningful way – combining the best of what human experts and AI have to offer, and ultimately allowing them to deliver better patient care together.

References

[1] Marcus, G., Davis, E. (2019). Rebooting AI: building artificial intelligence we can trust.

[2] Topol, E. (2019). Deep medicine: how artificial intelligence can make healthcare human again.

[3] Aresta, G., Jacobs, C., Araújo, T. et al. (2019). iW-Net: an automatic and minimalistic interactive lung nodule segmentation deep network. Sci Rep 9, 11591. https://www.nature.com/articles/s41598-019-48004-8

[4] https://www.fiercebiotech.com/medtech/philips-gets-fda-nod-for-radiology-informatics-platform

[5] Nagpal, K., Foote, D., Liu, Y. (2019). Development and validation of a deep learning algorithm for improving Gleason scoring of prostate cancer. npj Digit Med 2:48. https://www.nature.com/articles/s41746-019-0112-2

[6] National Academies of Sciences, Engineering, and Medicine (2015). Improving diagnosis in health care. https://www.ncbi.nlm.nih.gov/books/NBK338596/

[7] McKinney, S.M., Sieniek, M., Godbole, V. et al. (2020). International evaluation of an AI system for breast cancer screening. Nature 577, 89–94. https://pubmed.ncbi.nlm.nih.gov/31894144/

[8] Obuchowski, N.A., Bullen, J.A. (2019). Statistical considerations for testing an AI algorithm used for prescreening lung CT images. Contemporary Clinical Trials Communications 16:100434. https://www.sciencedirect.com/science/article/pii/S2451865419301966

[9] Eykholt, K., Evtimov, I., Fernandes, E., et al. (2018). Robust physical-world attacks on deep learning models. arXiv preprint arXiv:1707.08945. https://arxiv.org/abs/1707.08945

[10] Van Hartskamp, M., Consoli, S., Verhaegh, W., Petkovic, M., Van de Stolpe, A. (2019). Artificial Intelligence in clinical healthcare applications: viewpoint. Interact J Med Res, 8(2): e12100. https://pubmed.ncbi.nlm.nih.gov/30950806/

Author

Henk van Houten

Former Chief Technology Officer at Royal Philips from 2016 to 2022