As artificial intelligence (AI) is beginning to make its mark on healthcare, questions around the ethical and responsible use of AI have moved to the forefront of debate. To what extent can we rely on AI algorithms when it comes to matters of life and death or personal health and well-being? How do we make sure that these algorithms are used only for their intended purposes? And how do we ensure that AI does not inadvertently discriminate against specific cultures, minorities, or other groups, thereby perpetuating inequalities in access to and quality of care? These questions are far too important to be answered in hindsight. They compel us to think through proactively – as an industry and as individual actors – how we can best advance AI in healthcare and healthy living to the benefit of consumers, patients, and care professionals, while avoiding unintended consequences.

As AI in healthcare and personal health applications often involves the use of sensitive personal data, a key priority is obviously to use that data responsibly. Data must be kept safe and secure at all times, and processed in compliance with all relevant privacy regulations. At Philips, we have captured this in our Data Principles. Yet because AI is set to fundamentally change the way people interact with technology and make decisions, it requires additional standards and safeguards. Data policies alone are not enough. This led us to develop a set of five guiding principles for the design and responsible use of AI in healthcare and personal health applications – all based on the key notion that AI-enabled solutions should complement and benefit customers, patients, and society as a whole.

What does each of these principles entail and why are they so important?

1. Well-being

AI-enabled solutions should benefit the health and well-being of individuals and contribute to the sustainable development of society. As with any technological innovation, first and foremost we should ask ourselves what human purpose AI is meant to serve. The goal should not be to advance AI for AI’s sake – it should be to improve people’s well-being and quality of life. With the rising global demand for healthcare and a growing shortage of healthcare professionals, there is a clear need for AI to help alleviate overstretched healthcare systems. Much has already been written about how AI can act as a smart assistant to healthcare providers in diagnosis and treatment – all with the potential to improve health outcomes at lower cost, while improving staff and patient experience (known as the Quadruple Aim). But to truly benefit the health and well-being of people for generations to come, we need to think beyond the current model of reactive ‘sick care’. With AI, we can move toward true, proactive health care. At every step in people’s lives – from cradle to old age – AI-powered applications and health services could inform and support healthy living with personalized coaching and advice, preventing as well as treating disease.

This should not be the privilege of the happy few. In line with the UN’s Sustainable Development Goals, I believe we must take an inclusive approach that promotes access to care and healthy lives for all. In many developing countries today, large inequalities exist between urban and rural health services. AI, combined with other enabling technologies such as the Internet of Things and high-bandwidth telecommunication technologies like 5G, could help reduce these inequalities by making specialist medical knowledge available in rural areas [1].

2. Oversight

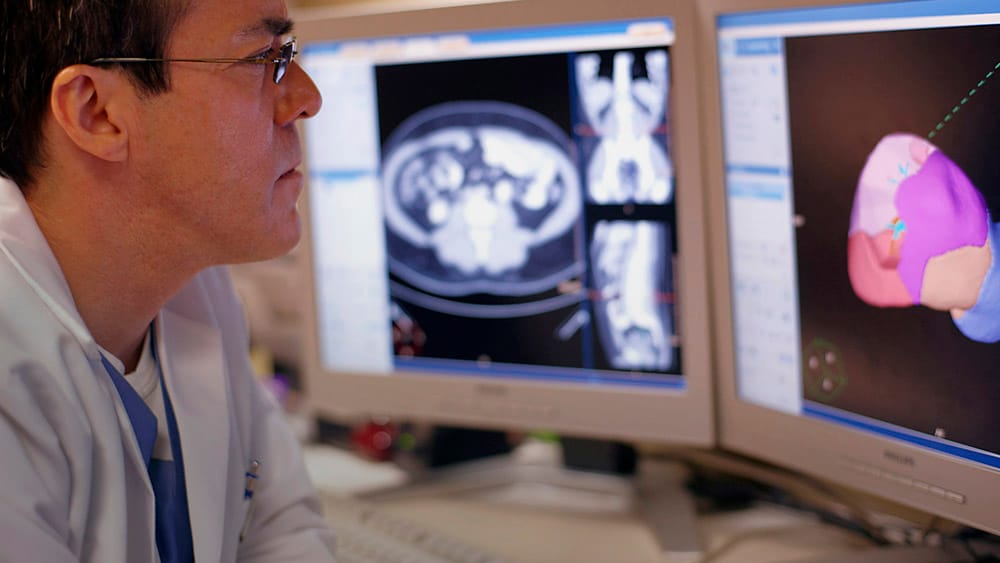

AI-enabled solutions should augment and empower people, with appropriate supervision. Now that we have established human well-being as the guiding purpose for the development of AI, how do we ensure that AI delivers on that goal? As I have argued before, AI augments human skills and expertise but doesn’t make them any less relevant. Especially in the complex world of healthcare, where lives are at stake, AI tools such as machine learning require human domain knowledge and experience to put their outcomes in context. A classic example from the medical literature illustrates what could go wrong if we were to rely on algorithms blindly. In the mid-1990s, a multi-institutional research team created a machine learning model to predict the probability of death for patients with pneumonia. The goal was to ensure that high-risk patients would be admitted to the hospital, while low-risk patients could be treated as outpatients. Based on the training data, the model inferred that pneumonia patients with a history of asthma have a lower risk of dying from pneumonia than the general population – a finding that flies in the face of common medical knowledge [2]. Without a deeper understanding of the data, this could have led to the erroneous conclusion that pneumonia patients with a history of asthma did not need hospital care as urgently. But this couldn’t have been further from the truth. What actually explained the pattern in the data is that patients with a history of asthma were usually admitted directly to the Intensive Care Unit when they presented at the hospital with pneumonia. This lowered their observed risk of dying from pneumonia relative to the general population. Had they not received such proactive care, they would in fact have had a much higher risk of dying. There was nothing wrong with the machine learning model per se, but the correlation it had identified in the training data set was dangerously misleading.

This example shows how crucial it is that the data-crunching power of AI goes hand in hand with domain knowledge from human experts and established clinical science. One cannot go without the other.
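
The mechanism behind the asthma finding can be reproduced in a few lines. The following is a minimal Python sketch with entirely hypothetical numbers, not values from the study in [2]. It assumes that asthma doubles a patient's baseline risk, that every asthma patient is sent to the ICU, and that ICU care reduces risk more than ward care. A model that sees only recorded outcomes, and not the care given, would conclude that asthma is protective.

```python
# Hypothetical illustration of confounding by treatment; every number is invented.
BASELINE_RISK = {"asthma": 0.20, "no_asthma": 0.10}   # assumed risk of death without hospital care
RISK_MULTIPLIER = {"icu": 0.1, "ward": 0.5}           # assumed effect of the care actually received
CARE_POLICY = {"asthma": "icu", "no_asthma": "ward"}  # assumed triage rule at the hospital


def observed_mortality(group: str) -> float:
    """Mortality visible in the training data: baseline risk after the care that was given."""
    return BASELINE_RISK[group] * RISK_MULTIPLIER[CARE_POLICY[group]]


def untreated_mortality(group: str) -> float:
    """Counterfactual risk if the patient were sent home, which is what triage must weigh."""
    return BASELINE_RISK[group]


if __name__ == "__main__":
    for group in BASELINE_RISK:
        print(f"{group:<10} observed={observed_mortality(group):.3f} "
              f"untreated={untreated_mortality(group):.3f}")
```

Ranked by observed mortality, asthma patients look like the lower-risk group (0.020 versus 0.050); ranked by their risk without care, they are the higher-risk group (0.200 versus 0.100). Only domain knowledge about how patients were triaged reveals which ranking matters for an outpatient decision.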

In medical research, it is therefore essential to bring together AI engineers, data scientists, and clinical experts. This helps to ensure proper validation and interpretation of AI-generated insights. Once an AI application has made its way into clinical practice, it can be a powerful decision support tool – but it still requires human oversight. Its findings and recommendations need to be judged or checked by the clinician, based on a holistic understanding of the patient context.

3. Robustness

AI-enabled solutions should not do harm and should have appropriate protection against deliberate or inadvertent misuse. To promote the responsible application of AI, we also need to put safeguards in place to prevent unintended or deliberate misuse. Having a robust set of control mechanisms will help to instill trust while mitigating potential risks. This is particularly important because misuse may be subtle and could occur inadvertently – with the best of intentions. For example, suppose an algorithm is developed to help screen for lung cancer using low-dose CT scans. Once the algorithm has proven effective for this application, with a high sensitivity for spotting possible signs of lung cancer, it may seem obvious to extend its use to a diagnostic setting as well. But for effective and reliable use in that setting, the algorithm may need to be tuned differently, increasing its specificity so that people who do not actually have lung cancer are correctly ruled out. A totally different algorithm could even perform better. Different settings, different demands. One way of preventing (inadvertent) misuse is to monitor the performance of AI-enabled solutions in clinical practice and to compare the actual outcomes to those obtained in training and validation. Any significant discrepancies would call for further inspection. Training and education can also go a long way in safeguarding proper use of AI. It’s vital that every user has a keen understanding of the strengths and limitations of a specific AI-enabled solution.
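
The trade-off between screening and diagnostic use, and the idea of comparing real-world results with validation results, can be sketched in Python. This is a minimal illustration on invented toy scores: it shows only one form of tuning (moving the decision threshold of the same model), whereas in practice a retrained or entirely different algorithm may be needed. The helper `flag_discrepancies` and its 0.1 tolerance are hypothetical choices, not an established monitoring standard.

```python
"""Hypothetical sketch: one model, two operating points, plus a post-deployment check."""
from typing import Dict, List, Sequence, Tuple

# Invented toy data: model scores and true labels (1 = cancer confirmed).
SCORES = [0.05, 0.15, 0.25, 0.35, 0.45, 0.55, 0.65, 0.75, 0.85, 0.95]
LABELS = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1]


def sensitivity_specificity(scores: Sequence[float], labels: Sequence[int],
                            threshold: float) -> Tuple[float, float]:
    """Flag a case as positive when its score reaches the threshold."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if flagged and label:
            tp += 1
        elif flagged:
            fp += 1
        elif label:
            fn += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


def flag_discrepancies(validation: Dict[str, float], deployed: Dict[str, float],
                       tolerance: float = 0.1) -> List[str]:
    """Return the metrics whose deployed value differs from validation by more than tolerance."""
    return [name for name, expected in validation.items()
            if abs(deployed[name] - expected) > tolerance]


if __name__ == "__main__":
    # A low threshold suits screening: miss as few cancers as possible.
    print("screening ", sensitivity_specificity(SCORES, LABELS, threshold=0.3))
    # A high threshold trades sensitivity for specificity, closer to diagnostic needs.
    print("diagnostic", sensitivity_specificity(SCORES, LABELS, threshold=0.8))
    # Compare (hypothetical) real-world metrics with those obtained in validation.
    print(flag_discrepancies({"sensitivity": 0.95, "specificity": 0.80},
                             {"sensitivity": 0.78, "specificity": 0.81}))
```

On this toy data, a threshold of 0.3 gives a sensitivity of 1.0 and a specificity of 0.5, while 0.8 gives 0.5 and 1.0. The monitoring check flags only `sensitivity`, the kind of discrepancy that would call for further inspection.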

4. Fairness

AI-enabled solutions should be developed and validated using data that is representative of the target group for the intended use, while avoiding bias and discrimination. Another priority is to make sure that AI is fair and free of bias. We should be mindful that AI is only as objective as the data we feed into it. Bias could easily creep in if an algorithm were developed and trained on a specific subset of the population and then applied to a broader population. Take spontaneous coronary artery dissection (SCAD): a rare and often fatal condition that causes a tear in a blood vessel of the heart. Eighty percent of SCAD cases involve women, yet women are typically underrepresented in clinical trials investigating SCAD [3]. If we were to develop an AI-powered clinical decision support tool based on data from those clinical trials, it would learn predominantly from male data. As a result, the tool may not fully capture the intricacies of the disease in women, perpetuating the bias that was already present in the trials. Similar risks arise when an AI algorithm is developed in one region of the world and then applied in another region without first being revalidated on local data. For example, cardiovascular conditions typically affect people from India 10 years earlier than people of European ancestry [4]. Therefore, if an algorithm has been trained only on data from people of European ancestry, it may fail to pick up early signs of cardiovascular disease in Indian people. How can such biases be prevented? First, development and validation of AI must be based on data that accurately represent the diversity of people in the target group. When AI is applied to a different target group, it should first be revalidated, and possibly retrained.
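
One practical safeguard during validation is to report performance per subgroup rather than only in aggregate, so that a gap like the one described for women with SCAD becomes visible. The Python sketch below is illustrative only: the records are invented (not SCAD or trial data), and the choice of sensitivity as the metric and the 0.05 tolerance are assumptions. A real revalidation would use clinically chosen metrics, adequate sample sizes, and confidence intervals.

```python
"""Hypothetical sketch: per-subgroup sensitivity check before (re)deploying a model."""
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Record = Tuple[str, bool, bool]  # (subgroup, has_condition, flagged_by_model)

# Invented validation records; not SCAD or trial data.
RECORDS: List[Record] = [
    ("female", True, True), ("female", True, False), ("female", True, False),
    ("female", True, True), ("female", False, False),
    ("male", True, True), ("male", True, True), ("male", True, True),
    ("male", True, True), ("male", False, False),
]


def sensitivity(records: Iterable[Record]) -> float:
    """Share of true cases that the model flagged."""
    positives = [r for r in records if r[1]]
    return sum(1 for r in positives if r[2]) / len(positives)


def sensitivity_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Sensitivity per subgroup; subgroups without any true cases are skipped."""
    grouped: Dict[str, List[Record]] = defaultdict(list)
    for record in records:
        grouped[record[0]].append(record)
    return {group: sensitivity(rs) for group, rs in grouped.items()
            if any(r[1] for r in rs)}


def underperforming_groups(records: List[Record], max_gap: float = 0.05) -> List[str]:
    """Subgroups whose sensitivity falls more than max_gap below the overall figure."""
    overall = sensitivity(records)
    return sorted(group for group, value in sensitivity_by_group(records).items()
                  if overall - value > max_gap)


if __name__ == "__main__":
    print(sensitivity(RECORDS), sensitivity_by_group(RECORDS), underperforming_groups(RECORDS))
```

Here the aggregate sensitivity of 0.75 hides a subgroup result of 0.5 for women, which the check flags, while men score 1.0.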

From my experience at Philips, having a diverse organization – with research teams in China, the US, India, and Europe – also helps to create awareness of the importance of fairness in AI. Diversity in backgrounds fosters an inclusive mindset.

5. Transparency

AI-enabled solutions require disclosure on which functions and features are AI-enabled, the validation process, and the responsibility for ultimate decision-taking. As a fifth and final principle, public trust and wider adoption of AI in healthcare will ultimately depend on transparency. Whenever AI is applied in a solution, we need to be open about this and disclose how it was validated, which data sets were used, and what the relevant outcomes were. It should also be clear what the role of the healthcare professional is in making a final decision. But perhaps most importantly, optimal transparency can be achieved by developing AI-enabled solutions in partnership. By forging close collaboration between different actors in the healthcare system – providers, payers, patients, researchers, regulators – we can jointly uncover and address the various needs, demands, and concerns that should all be taken into consideration when developing AI-enabled solutions. Not as an afterthought, but right from the start, in an open and informed dialogue.

Shaping the future of AI in healthcare together

Taken together, I believe these five principles can help pave the way towards responsible use of AI in healthcare and personal health applications. Yet I also realize that none of us has all the answers. New questions will arise as we continue on this exciting path of discovery and innovation with AI, and I look forward to discussing them together.

Read more about the Philips AI Principles.

References

[1] Guo J, Li B. The Application of Medical Artificial Intelligence Technology in Rural Areas of Developing Countries. Health Equity. 2018;2(1):174–181.
[2] Cooper G, Aliferis C, Ambrosino R, et al. An evaluation of machine-learning methods for predicting pneumonia mortality. Artificial Intelligence in Medicine. 1997;9(2):107–138.
[3] Perdoncin E, Duvernoy C. Treatment of Coronary Artery Disease in Women. Cardiovasc J. 2017;13(4):201–208.
[4] Prabhakaran D, Jeemon P, Roy A. Cardiovascular Diseases in India: Current Epidemiology and Future Directions. Circulation. 2016;133(16):1605–1620.

Author

Henk van Houten

Former Chief Technology Officer at Royal Philips from 2016 to 2022