I’m a firm believer in the promise of artificial intelligence (AI): a tool that, if used in a smart way, can give healthcare providers superpowers by putting the right information at their fingertips in the moments that truly matter to them and their patients. Yet I also believe that in order to deliver on that promise, we must focus on people – not technology – first.

In conversations with healthcare leaders around the world, one challenge that typically comes up is the difficulty of finding enough specialized physicians to meet the growing need for care. At that point I like to ask: what if we could make your existing physicians twice as effective and efficient, enabling them to make better decisions while spending more time with patients?

That’s essentially the promise of AI – whether it’s through automating mundane chores that draw a physician’s focus away from the patient, or by providing clinical decision support in the moments that ultimately have the power to lead to better health outcomes.

And yet, for all its technological advances, surprisingly few AI applications have successfully made their way from the research lab into clinical practice. What’s holding AI in healthcare back?

Overcoming barriers to AI adoption

Issues around data management and interoperability are often portrayed as the main bottlenecks for wider AI adoption, and with good reason. But in the hectic and stressful day-to-day reality of medical professionals, there are other factors at play, too. Human factors.

Physicians often work on tight schedules, relying on routines built up over years of experience. If AI doesn’t seamlessly fit into their workflows, or worse, if it creates additional complexity, physicians will have a hard time embracing it. And in a high-stakes environment like healthcare, where physicians have to make split-second decisions with potentially far-reaching consequences, trust in new technology can be hard to gain. Would you rely on an algorithm’s recommendation when a patient’s life is in your hands? Both poor workflow fit and lack of trust have been shown to hamper uptake of AI [1,2].

Earlier healthcare innovations faced some of the same adoption challenges. Just think of the Electronic Medical Record (EMR). EMRs were originally touted as the future of healthcare, yet it is now widely acknowledged that they were not sufficiently designed with physicians’ needs and experiences in mind, with doctors lamenting that they are “trapped behind their screens” [3,4]. This time, with AI fueling the next wave of digital innovation, we must learn from the mistakes of the past, and take a radically different approach. One that puts people front and center. Not as an afterthought, but right from the beginning.

Physicians and AI: the new augmented medical team

What healthcare needs is human-centered AI: artificial intelligence that is not merely driven by what is technically feasible, but first and foremost by what is humanly desirable. This means we need to combine data science and technical expertise with a strong understanding of the clinical context in which physicians operate as well as the cognitive and emotional demands of their daily reality.

Thinking of physicians and AI as a collaborative system is essential here. While AI might increasingly outperform physicians in specific tasks, AI and deep clinical knowledge need to go hand in hand because both have their unique strengths and limitations. Getting this complementary relationship right is what will ultimately determine the adoption and impact of AI in healthcare.

We already see the early contours of human-AI collaboration in radiology, a discipline that has always been at the forefront of digital transformation in healthcare and is now a hotbed of AI innovation. Radiologists and imaging staff are in short supply in many parts of the world and face ever-increasing workloads, so they could certainly benefit from having a smart digital assistant.

But how do we ensure that AI complements these human experts such that together they achieve more than either could alone? Clearly, the answer isn’t going to present itself on a silver platter if we focus on technology in isolation.

I have long believed that in order to deliver meaningful innovation to people, you need to innovate with them rather than for them. As I argued at the Fortune Brainstorm Design conference some time ago, building algorithms in the confines of a research lab will not, by itself, bring us human-centered AI. Instead, we must first get out there and immerse ourselves in the daily reality of the people whose lives we are trying to improve.

That’s exactly what we are doing with our clinical and academic partners, such as the Catharina Hospital and the Eindhoven University of Technology (as part of our joint e/MTIC collaboration [i]) and Leiden University Medical Center in the Netherlands, conducting 360-degree on-site workflow analyses and co-creation sessions with medical experts to look beyond the technology itself and understand the full context of use.

Through this work, we are beginning to uncover the experience drivers that foster strong physician-AI collaboration in radiology. And what has become abundantly clear is that designing for human-centered AI involves much more than coming up with a superior user interface.

What’s technically possible may not be what’s actually needed

First and foremost, we need to ask ourselves: what kind of AI would be useful in the first place? This seems like an obvious question, but AI applications often fail to translate to clinical practice precisely because the underlying need or pain point has been ill-defined.

For example, if an image analysis algorithm is merely able to identify abnormalities that a radiologist can spot at a glance, its added value may be limited despite its strong technical performance. Or let’s say you have an algorithm that helps measure tumor size: it may be slightly more accurate than a human observer, but if the difference is so small that it doesn’t practically impact treatment decisions, there is no real patient benefit.

Instead, if we take a systems thinking view, starting from the needs of the radiologist and other stakeholders in the radiology workflow, including the patient and the radiology technician who performs the exam, we can identify opportunities where AI will have the biggest added value. Those could be at any stage in the workflow.

For example, early in the workflow, AI can help to optimize patient scheduling so that patients get seen as quickly as possible. Or, it can assist radiology technicians in the selection and preparation of the appropriate imaging exams for a specific patient to avoid unnecessary rescans. Once images have been acquired, AI can support radiologists in various ways, for example by pointing them to incidental findings that are easy to overlook, or by saving them time through automatic integration of findings into the report.

There are plenty more use cases one could think of, some of which we are actively exploring with our clinical partners. Identifying pain points and mapping opportunities along the entire workflow is a great way to kick off an AI innovation program, because it creates a shared focus on the value that AI can bring at every step.

AI should be an integral part of the workflow

While defining the intended added value of AI is a vital starting point, it’s the way AI fits into the workflow – and can ultimately help to streamline it – that will make or break adoption in clinical practice.

If there’s one thing we’ve learned through our collaborations with radiology partners, it’s that what may seem like a useful application on paper can actually be a burden to the radiologist if it feels like “adding yet another thing” to their workflow. Radiologists work in a complex and time-pressured environment, running different software applications in parallel on multiple screens. That’s why it’s really important that we embed AI applications into their existing imaging and informatics solutions, making information easily available at their fingertips, rather than adding to a patchwork of point solutions.

But we must think even more holistically about workflow integration, looking at the diagnostic system at large. For example, AI could do a preliminary analysis of a patient’s CT scan to flag worrisome cases that require immediate follow-up with a PET-CT scan. In itself this would be a very useful application. It would only work, however, if space can be made available in the PET-CT schedule that same day, which presents a new workflow challenge (one that AI may be able to help with as well!). As this example shows, you need to look at the entire hospital’s way of working, not just individual workflow elements.

AI should instill appropriate trust that matches its capabilities

Another design challenge posed by AI, which I alluded to before, is getting physicians to trust its output and recommendations. With the rise of deep learning, AI systems have become much more accurate and powerful – but they have also become increasingly hard to interpret, calling for a leap of faith from the user. Trust is critical for adoption, but hard to gain. Trust is also easily lost when the AI system gives a few faulty recommendations, even if it is highly accurate on average.

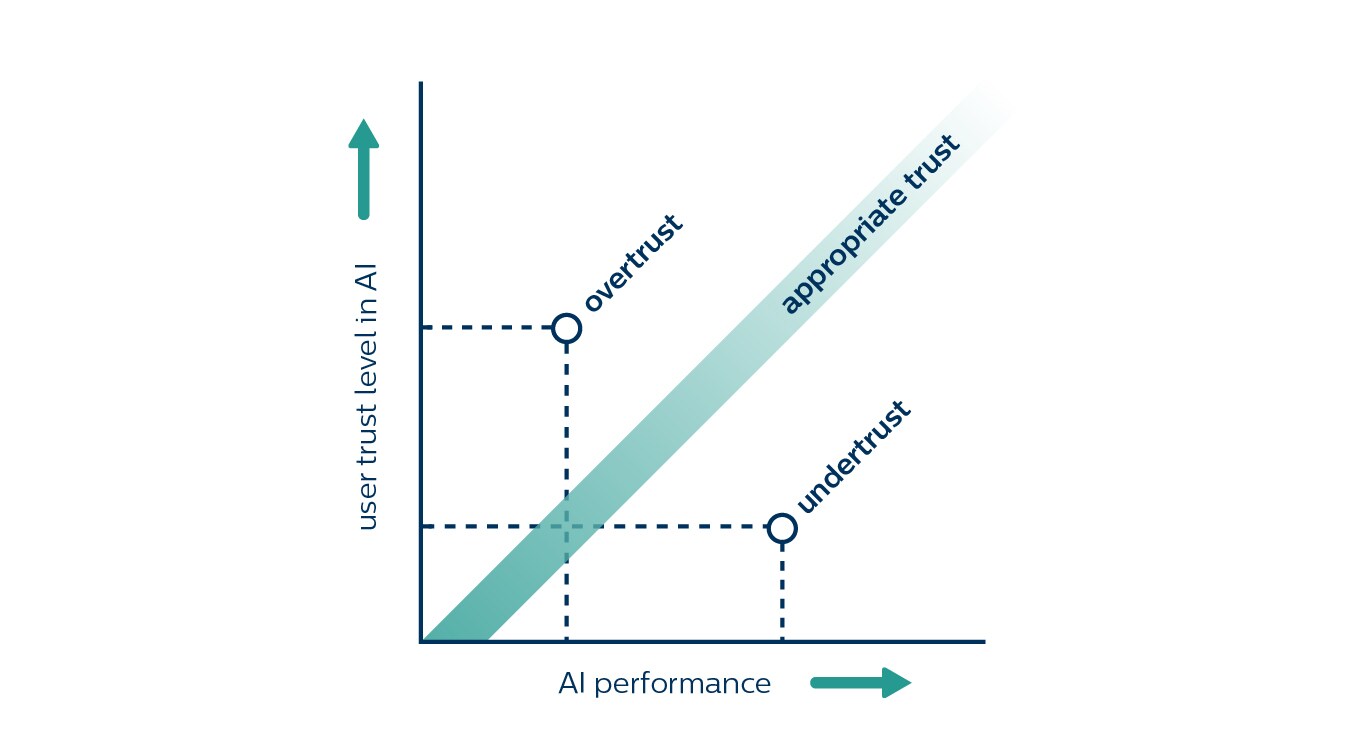

And the tricky thing is that when it comes to trust, there can be too much of a good thing as well. You don’t want a radiologist to blindly rely on an algorithm’s findings when the algorithm sometimes misses a disease. What you want is appropriate trust that matches the AI’s capabilities. This means navigating a thin line between undertrust and overtrust.

Undertrust occurs when trust falls short of the AI’s actual capabilities. Several studies have shown how radiologists dismissed small cancers that the AI had correctly identified [5,6]. At the other extreme, overtrust leads to over-reliance on AI, causing radiologists to miss cancers that the AI failed to identify [7].

How do you help radiologists find the right balance between trust and vigilance?

First, it is essential that they understand what an AI system can and cannot do, what data it was trained on, and what it has been optimized for. For example, an algorithm can be optimized to spot small tumors in the lungs, but it might therefore overlook bigger ones. This is not at all intuitive to the radiologist and requires active communication to prevent distrust. Radiologists will also want to know how well the AI does what it is supposed to do, calling for transparency on its validation and performance.

In addition, we need to consider how we can make the AI’s findings as understandable as the situation requires. While interpretability may not be as important when the stakes are low, in a high-stakes situation the black-box nature of an AI system can be an impediment to trust. Together with our clinical collaborators and academic partners such as the Eindhoven University of Technology, we are investigating various ways of addressing this problem – either by explaining the AI’s design philosophy in general, or by providing the rationale for individual recommendations.

Putting people first

Of course, physician perceptions of AI will continue to evolve as its capabilities mature. Just like a junior resident who first joins a team, AI needs to demonstrate its added value and earn trust over time.

With AI as a trusted collaborator that is seamlessly embedded into the workflow, radiologists will be freed from mundane tasks and able to spend more time on complex cases, increasing their impact on clinical decision-making. Healthcare professionals in other areas of medicine will increasingly benefit from AI assistance, too – whether it’s in digital pathology, acute care, image-guided therapy, or chronic disease management. I firmly believe it will make their work more valuable and rewarding, not less.

But as I hope to have shown, that future won’t happen by itself. It will only happen by design.

Designers must take an active role from the very start of AI development, working alongside data scientists, engineers, and clinical experts to create AI-enabled experiences that make a positive difference to healthcare professionals and patients alike.

Only then, if we put people, not technology, first, will we see AI in healthcare fully deliver on its promise.

Note

[i] e/MTIC or Eindhoven MedTech Innovation Center is a research collaboration between Eindhoven University of Technology, Catharina Hospital, Maxima Medical Center, Kempenhaeghe Epilepsy and Sleep Center, and Philips.

References

[1] Christopher J. Kelly, Alan Karthikesalingam, Mustafa Suleyman, Greg Corrado, and Dominic King. 2019. Key challenges for delivering clinical impact with artificial intelligence. BMC Medicine 17, 1: 195. https://doi.org/10.1186/s12916-019-1426-2

[2] Lea Strohm, Charisma Hehakaya, Erik R. Ranschaert, Wouter P. C. Boon, and Ellen H. M. Moors. 2020. Implementation of artificial intelligence (AI) applications in radiology: hindering and facilitating factors. European Radiology. https://doi.org/10.1007/s00330-020-06946-y

[3] Atul Gawande. 2018. Why doctors hate their computers. The New Yorker. https://www.newyorker.com/magazine/2018/11/12/why-doctors-hate-their-computers

[4] Robert M. Wachter. 2015. The digital doctor: hope, hype, and harm at the dawn of medicine’s computer age. McGraw-Hill Education.

[5] D. W. De Boo, M. Prokop, M. Uffmann, B. van Ginneken, and C. M. Schaefer-Prokop. 2009. Computer-aided detection (CAD) of lung nodules and small tumours on chest radiographs. European Journal of Radiology 72, 2: 218–225. https://doi.org/10.1016/j.ejrad.2009.05.062

[6] Robert M. Nishikawa, Alexandra Edwards, Robert A. Schmidt, John Papaioannou, and Michael N. Linver. 2006. Can radiologists recognize that a computer has identified cancers that they have overlooked? In Medical Imaging 2006: Image Perception, Observer Performance, and Technology Assessment, 614601. https://doi.org/10.1117/12.656351

[7] W. Jorritsma, F. Cnossen, and P. M. A. van Ooijen. 2015. Improving the radiologist–CAD interaction: designing for appropriate trust. Clinical Radiology 70, 2: 115–122. https://doi.org/10.1016/j.crad.2014.09.017

[8] John D. Lee and Katrina A. See. 2004. Trust in automation: designing for appropriate reliance. Human Factors 46, 1: 50–80. https://doi.org/10.1518/hfes.46.1.50_30392

Author

Sean Carney

Former Chief Experience Design Officer at Royal Philips from 2011 to 2022